Evolution of k8s worker nodes

Evolution of Kubernetes Worker Nodes and the architecture of CRI-O and Red Hat OpenShift 4.x

Until a few months back, I never called containers just “containers”; I called them “docker containers”. When I heard that OpenShift was moving to CRI-O, I thought, what's the big deal? To understand the “big deal”, I had to understand the evolution of the k8s worker node.

Evolution

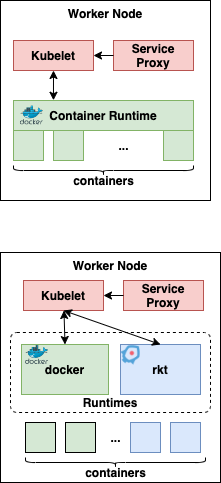

If you look at the evolution of the k8s architecture, the way worker nodes run containers has changed and been optimized significantly. Here are the significant stages of that evolution, as I have attempted to capture them.

Stage 0: docker is the captain

It started with a simple architecture in which the kubelet, the agent on each worker node, received commands from admins through the api-server on the master node. The kubelet used the docker runtime to launch docker containers (pulling the images from the registry). This was all good until alternative container runtimes, with better performance and unique strengths, started appearing in the market. We realised that it would be good if we could plug and play these runtimes. The obvious design pattern to fix this issue? The “adapter/proxy” pattern, right? That led to the next stage.

Evolution is all about adapting to the changes in the ecosystem

Stage 1: CRI (Container Runtime Interface)

The Container Runtime Interface (CRI) was introduced in K8s 1.5. CRI consists of a specification, protocol buffers, a gRPC API and libraries. It brought an abstraction layer that acts as an adapter, with a gRPC client running in the kubelet and a gRPC server running in the CRI shim. This made it much simpler to plug in various container runtimes.
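To make the adapter idea concrete, here is a minimal sketch in Python. It is not the real CRI: the actual interface is a gRPC API defined in protocol buffers, while this sketch uses plain method calls. The class and method names (`ContainerRuntime`, `DockerShim`, `CrioShim`, `Kubelet`, `sync_pod`) are my own hypothetical stand-ins, chosen only to show that the kubelet codes against one interface and each shim adapts it to a runtime.

```python
from abc import ABC, abstractmethod
from typing import List


class ContainerRuntime(ABC):
    """Hypothetical, simplified stand-in for the interface the kubelet codes against."""

    @abstractmethod
    def pull_image(self, image: str) -> str:
        ...

    @abstractmethod
    def run_container(self, name: str, image: str) -> str:
        ...


class DockerShim(ContainerRuntime):
    """Adapter that would translate the calls for the docker engine."""

    def pull_image(self, image: str) -> str:
        return f"docker pulled {image}"

    def run_container(self, name: str, image: str) -> str:
        return f"docker started {name} from {image}"


class CrioShim(ContainerRuntime):
    """Adapter that would translate the same calls for CRI-O."""

    def pull_image(self, image: str) -> str:
        return f"cri-o pulled {image}"

    def run_container(self, name: str, image: str) -> str:
        return f"cri-o started {name} from {image}"


class Kubelet:
    """Knows only the interface, never a concrete runtime."""

    def __init__(self, runtime: ContainerRuntime) -> None:
        self.runtime = runtime

    def sync_pod(self, name: str, image: str) -> List[str]:
        # The same two calls work unchanged, whichever shim is plugged in.
        return [
            self.runtime.pull_image(image),
            self.runtime.run_container(name, image),
        ]


if __name__ == "__main__":
    for shim in (DockerShim(), CrioShim()):
        print(Kubelet(shim).sync_pod("web", "nginx:1.25"))
```

Swapping the runtime is a one-line change where the `Kubelet` is constructed, which is the whole point of the adapter.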

Before we go any further, we need to understand what functionality is expected from a container runtime. The container runtime used to manage everything: downloading the images, unpacking them, running them, and also handling networking and storage. It was fine until we started realizing that this is like a monolith!

Let me layer these functionalities into 2 levels.

- High level: image management, transport, unpacking the images, and an API to send commands to run the container, network, storage (e.g. rkt, docker, LXC, etc).

- Low level: running the containers.

It made more sense to split these functionalities into components that can be mixed and matched with various open-source options that provide more optimizations and efficiencies. The obvious design/architecture pattern to fix this issue? The “layering” pattern, right? That led to the next stage.
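The split can be sketched in a few lines of Python. Everything here is hypothetical and heavily simplified: `HighLevelRuntime` and `LowLevelRuntime` are names I made up, the "pull" only records a fake bundle path, and nothing is actually downloaded or executed. The sketch only shows the layering, where the high level owns image handling and hands an unpacked bundle to whichever low-level runtime is plugged in.

```python
from typing import Dict


class LowLevelRuntime:
    """Low level: runs a container from an already unpacked bundle, nothing else."""

    def __init__(self, name: str) -> None:
        self.name = name

    def run(self, bundle: str) -> str:
        return f"{self.name} running {bundle}"


class HighLevelRuntime:
    """High level: image management and unpacking, then delegation downwards."""

    def __init__(self, low_level: LowLevelRuntime) -> None:
        self.low_level = low_level
        self.store: Dict[str, str] = {}  # image reference -> bundle path

    def pull(self, image: str) -> str:
        # Pretend to download and unpack; only the bundle path is recorded.
        bundle = "/bundles/" + image.replace("/", "_").replace(":", "_")
        self.store[image] = bundle
        return bundle

    def start(self, image: str) -> str:
        # Reuse the unpacked bundle if the image was pulled before.
        bundle = self.store.get(image) or self.pull(image)
        return self.low_level.run(bundle)


if __name__ == "__main__":
    runtime = HighLevelRuntime(LowLevelRuntime("runc"))
    print(runtime.start("quay.io/demo/app:1.0"))
```

Because the high level only calls `run(bundle)`, the low-level piece can be replaced without touching image management.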

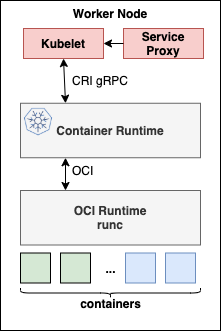

Stage 2: CRI-O & OCI

So the OCI (Open Container Initiative) came up with clear container runtime and image specifications, which helped multi-platform support (Linux, Windows, VMs etc). runc is the reference implementation of the OCI runtime specification, and it is the low-level container runtime.

Modern container runtimes are built on this layered architecture, where the kubelet talks to the container runtime through CRI over gRPC, and the container runtime runs the containers through an OCI runtime.
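What gets handed to the low-level runtime is a bundle: a root filesystem plus a `config.json` described by the OCI runtime specification. Here is a rough Python sketch that builds a stripped-down `config.json`. The field names come from the OCI runtime spec, but the helper names (`minimal_oci_config`, `render`) and the values (hostname, namespace list, version string) are my own assumptions for illustration, and a real config generated by a tool such as runc carries more (mounts, capabilities and so on).

```python
import json
from typing import Dict, List


def minimal_oci_config(args: List[str], rootfs: str = "rootfs") -> Dict:
    """Build a simplified OCI runtime config as a plain dictionary."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,  # the command the container runs
            "env": ["PATH=/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path to the unpacked image filesystem, relative to the bundle directory.
        "root": {"path": rootfs, "readonly": True},
        "hostname": "demo",  # illustrative value
        "linux": {
            # Namespaces give the process its isolated view of the system.
            "namespaces": [
                {"type": kind}
                for kind in ("pid", "network", "ipc", "uts", "mount")
            ]
        },
    }


def render(config: Dict) -> str:
    """Serialize the config the way it would sit on disk as config.json."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(render(minimal_oci_config(["sleep", "60"])))
```

The high-level runtime's job ends at producing this bundle; anything that understands the format can run it.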

There are various implementations of CRI, such as dockershim, CRI-O and containerd.

Towards the end of Stage 1, I mentioned the flexibility to mix and match components into a toolkit for end-to-end container management. That needed Captain America to assemble the Avengers and provide an end-to-end container platform.

Avengers of k8s world - led by Captain “OpenShift”

- podman: daemonless container engine for developing, managing and running OCI containers. It speaks the docker CLI language, to the extent that you can just alias it (alias docker=podman).

- skopeo: a CLI tool for working with container images and registries. One of the features I love most about skopeo is the ability to inspect images on a remote registry without downloading or unpacking them! It has matured into a full-fledged image management tool for remote registries, including signing images, copying between registries and keeping remote registries in sync. This significantly increases the pace of container build, manage and deploy pipelines.

- buildah: a tool that helps build OCI images incrementally! Yes, incrementally. I was playing around with this the other day. I don’t have to imagine the image composition and write a complex Dockerfile. Instead, I just build the image one layer at a time, test it, roll back (if required), and once I am satisfied, I can commit it to the registry. How cool is that!

- cri-o: lightweight container runtime for k8s. I will write more about this in the next section.

- OpenShift: End to end container platform…the real Captain!!
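To give a feel for how these tools fit together, here is a small Python sketch that only builds the command lines and prints them; it does not run anything, so it works even without the tools installed. The subcommands used (`skopeo inspect`, `skopeo copy`, `buildah from`, `buildah run`, `buildah commit`) are real, but the helper function names, the image references under `registry.example.com` and the container name `demo-build` are hypothetical placeholders.

```python
import shlex
from typing import List


def skopeo_inspect(image: str) -> List[str]:
    """Inspect an image on a remote registry without pulling it."""
    return ["skopeo", "inspect", f"docker://{image}"]


def skopeo_copy(src: str, dst: str) -> List[str]:
    """Copy an image from one registry to another."""
    return ["skopeo", "copy", f"docker://{src}", f"docker://{dst}"]


def buildah_steps(base: str, container: str,
                  commands: List[List[str]], target: str) -> List[List[str]]:
    """Incremental build: start from a base, run steps one by one, then commit."""
    steps = [["buildah", "from", "--name", container, base]]
    for command in commands:
        # Each step can be run and tested on its own before moving on.
        steps.append(["buildah", "run", container, "--"] + command)
    steps.append(["buildah", "commit", container, target])
    return steps


if __name__ == "__main__":
    plan = [skopeo_inspect("registry.example.com/demo/base:1.0")]
    plan += buildah_steps(
        base="registry.example.com/demo/base:1.0",
        container="demo-build",
        commands=[["dnf", "install", "-y", "httpd"]],
        target="registry.example.com/demo/web:1.0",
    )
    plan.append(skopeo_copy("registry.example.com/demo/web:1.0",
                            "mirror.example.com/demo/web:1.0"))
    for argv in plan:
        print(shlex.join(argv))
```

To actually execute a step, each list could be passed to `subprocess.run(argv, check=True)` on a machine where the tools are installed.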

Red Hat OpenShift goes for CRI-O

Red Hat OpenShift 4.x defaults to CRI-O as the container runtime. A lot of this decision (in my opinion) goes back to the choice of building an immutable infrastructure based on CoreOS, on which OpenShift 4.x runs. CRI-O was the obvious choice with CoreOS as the base. What's more, CRI-O is governed by the k8s community, completely open source, very lean, and directly implements the k8s Container Runtime Interface. Refer to these 6 reasons for details.

Here is a great picture, taken from this blog, that shows how CRI-O works under the hood in Red Hat OpenShift 4.x.

References

- https://www.projectatomic.io/blog/2017/06/6-reasons-why-cri-o-is-the-best-runtime-for-kubernetes/

- https://kubernetes.io/blog/2016/12/container-runtime-interface-cri-in-kubernetes/

- https://www.redhat.com/en/blog/red-hat-openshift-container-platform-4-now-defaults-cri-o-underlying-container-engine

Vijay Kumar A B, IBM Distinguished Engineer @ IBM

AB Vijay is an IBM Distinguished Engineer & CTO for CAS Manage & Application Innovation Lab. He is an IBM Master Inventor with more than 58 patents filed in his name, and has more than 22 years of experience at IBM. He is recognized as a subject matter expert for his contribution to advanced mobility in automotive, and has led several implementations involving complex industry solutions. He specializes in mobile, cloud, containers, automotive, sensor-based machine-to-machine, Internet of Things, an
