Istio - This is what you need to know

Introduction

Developing software applications that follow microservice architecture patterns has become the de facto standard for greenfield projects, and migrating existing monolithic applications to microservices has become a common trend.

The microservice architecture does solve many problems related to the scalability, maintainability, and reliability of applications. However, it also brings new challenges that must be addressed to keep the software healthy and reliable.

As a result of these new challenges, several tools have been developed over the past years to make it easier for engineers to run software built on a microservice architecture. One of the tools used for managing the traffic of microservices is Istio. It controls the traffic between microservices running in a Kubernetes cluster and provides traceability and observability of the interactions between the services.

In this blog post, we will present the components of the Istio service mesh and illustrate how we can use it to run microservices in production environments. The blog post covers the following topics:

- Istio architecture and components.

- Istio benefits and use cases.

- A demonstration of how to integrate Istio with Kubernetes and how to manage the deployed services on the cluster.

Requirements

Before proceeding, make sure that your environment satisfies the following requirements: Docker, Kubernetes (with kubectl configured to access your cluster), and Git must be installed on your machine.

What is Istio?

Istio is an open-source, platform-independent service mesh that focuses on providing the following services for applications running in a microservice architecture:

- Traffic management.

- Policy enforcement.

- Telemetry collection.

Although Istio initially targeted Kubernetes-based deployments, it is built in a way that makes it easy to adapt it to other environments. The platform is designed to manage the communication between services, largely without requiring changes to the services' code base, by providing an automated baseline for distributed tracing, routing, traffic resilience, and other features for controlling service-to-service communication. Furthermore, Istio is an open-source project with an active community.

In the case of Kubernetes, Istio extends the Kubernetes API by defining new resources such as Gateway, VirtualService, DestinationRule, and many more, using Kubernetes CustomResourceDefinitions (CRDs). This allows you to manage and control the communication between services using the same YAML format used by Kubernetes.
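Because these resources are regular CRDs, you can check from the command line that they are registered. The helper below is a minimal sketch that assumes kubectl is already configured for the cluster where Istio is installed; the function name check_istio_crds is hypothetical, and it only checks the five networking kinds discussed later in this post.

```shell
# Hypothetical helper: report any Istio networking CRD that is not registered.
# Assumes kubectl points at the cluster where Istio is installed.
check_istio_crds() {
  missing=0
  for kind in gateways virtualservices destinationrules serviceentries sidecars; do
    # CRD objects are named <plural>.<API group>
    if ! kubectl get crd "${kind}.networking.istio.io" >/dev/null 2>&1; then
      echo "missing CRD: ${kind}.networking.istio.io"
      missing=1
    fi
  done
  # Non-zero exit status when at least one CRD is missing
  return "$missing"
}
```

For example, running check_istio_crds && echo "Istio networking CRDs are installed" after the installation step below should print only the success message.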

Istio Architecture

As you can see in the above image taken from Istio’s official documentation, the latest Istio architecture consists of only two core components: The Envoy Proxy and the control plane “Istiod”.

Below is a brief overview of each of these components.

Envoy

Envoy is an L7 proxy and communication bus designed for large, modern microservice architectures. Istio uses an extended version of the original Envoy proxy. As you can see from the architecture diagram, the Envoy proxies make up the data plane: they are the only Istio component that interacts directly with the traffic of the running services.

Istio deploys the Envoy proxy as a sidecar of the running services, which means that Istio injects an Envoy proxy container into each Pod of a service. For instance, if a service is deployed with two Pods, Istio injects an Envoy proxy container into each of them. The Envoy container shares the Pod's network namespace, and therefore its IP address, with the application containers, so all incoming and outgoing traffic of the Pod can be managed by the Envoy proxy. Traffic interception is not the only built-in feature of the Envoy proxy; others include dynamic service discovery, load balancing, TLS termination, health checks, fault injection, and rich metrics.

In addition to the built-in features provided by the Envoy proxy, Istio provides a set of features to connect, secure, control, and observe the services running in the cluster.

- Connect: Control which services can connect to each other by enforcing security policies, access control rules, and rate limits.

- Secure: Secure the communication between services using authentication, authorization, and traffic encryption.

- Control: Apply policies to services, make sure that resources are distributed fairly, and use routing rules to control incoming traffic over protocols such as HTTP, gRPC, and WebSocket.

- Observe: Monitor how the services are performing and serving requests using automatic logging, request tracing, and service health monitoring.

Istiod

The control plane consists of the Istiod core component, which is responsible for the following tasks:

- Service discovery in the managed environment.

- Istio configuration management.

- Certificate management: Istiod acts as a certificate authority to enable secure mTLS communication between services.

- Translation of the high-level routing rules and policies that control the service traffic into Envoy-specific configuration.

- Propagation of the Envoy-specific configuration to all sidecar containers at runtime.
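The last two tasks can be observed on a running mesh with istioctl. The sketch below wraps two istioctl subcommands: proxy-status, which reports whether each sidecar's configuration (CDS, LDS, EDS, RDS) is in sync with Istiod, and proxy-config routes, which prints the routes Istiod generated for one sidecar. The inspect_proxy name and its Pod and namespace arguments are placeholders, not part of Istio.

```shell
# Hypothetical helper: show what Istiod has pushed to the sidecars.
# $1: Pod name (placeholder), $2: namespace (defaults to "default").
inspect_proxy() {
  pod="$1"
  ns="${2:-default}"
  # Sync status of every sidecar connected to Istiod
  istioctl proxy-status
  # Envoy routes generated from the VirtualServices for this one Pod
  istioctl proxy-config routes "${pod}.${ns}"
}
```

Once the guestbook is deployed later in this post, a call such as inspect_proxy <frontend-pod-name> guestbook shows the Envoy-level result of the routing rules applied to it.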

The Istiod component consists of three sub-components. A brief overview of each of these modules is provided below:

Pilot

Pilot is the component responsible for the communication between the control plane and the sidecar proxies. It provides service discovery, traffic management capabilities, and resilience features such as timeouts and retries. It also abstracts platform-specific service discovery mechanisms (such as Kubernetes, Consul, or VMs) and converts them into a standard format that the sidecar containers can consume.

Citadel

Citadel, also known as the Istiod security component, enables service-to-service and end-user authentication as well as identity management. It can also be used to upgrade unencrypted communication between services to encrypted traffic. Most importantly, it allows us to enforce the defined rules and policies based on service identity instead of layer 3 and layer 4 network identifiers.

Galley

Galley is the component responsible for validating, processing, and distributing configuration to the other components. It is the only component that interacts with the underlying platform, such as Kubernetes.

Istio Benefits

Building robust, available, and reliable software on a microservice architecture requires implementing several non-functional features. As a result, the development process requires either more time to implement these features or more budget to hire engineers to implement them. This is where Istio comes in: it is a tool that can reduce the complexity of developing and maintaining microservices.

For example, to implement distributed tracing for microservice software in the traditional way, you have to implement the tracing functionality in every service of the software stack, test the communication between the services, and make sure that request IDs are passed between the services on every interaction. This is an ongoing task for as long as the software is maintained. With Istio, on the other hand, the sidecar proxies generate the trace spans automatically; the services only need to forward the tracing headers (such as x-request-id and the B3 headers) from incoming to outgoing requests. As a result, application developers can focus on business logic and functional features and iterate quickly.

Another advantage of using Istio is that the control of the communications between the services is done from a centralized control point and independent of the deployed services.

To wrap it up, Istio provides several benefits and solutions for the challenges of running microservice architecture software. These benefits cover topics such as observability, traffic inspection, granular policies, automation, and decoupling the network from the application code by letting the service mesh handle things like retry logic.

Using Istio

The core feature of Istio is traffic management. It enables operators to control the network traffic between microservices, as well as inbound (ingress) and outbound (egress) traffic. Traffic management is configured with the following Istio API resources:

- Virtual services: A virtual service configures an ordered list of routing rules that control how the Envoy proxies route requests to a service within the Istio service mesh.

- Destination rules: Destination rules configure the policies that Istio applies to a request after the routing rules of the virtual service have been evaluated, such as the service subsets used for version-based routing.

- Gateways: Gateways configure the Envoy proxies that run at the edge of the mesh and load balance inbound or outbound HTTP, TCP, or gRPC traffic.

- Service entries: A service entry adds an entry to the service registry that Istio maintains internally, typically to describe dependencies outside the mesh.

- Sidecars: A Sidecar resource controls the configuration of the sidecar Envoy proxies used by Istio; for example, it can be used to isolate namespaces from each other.
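As an illustration of the Sidecar resource, the sketch below applies a namespace-wide Sidecar that only lets the proxies reach services in their own namespace and in istio-system, which is one way to express namespace isolation. It follows the pattern of Istio's Sidecar documentation; the function name apply_namespace_sidecar is hypothetical, and the target namespace must already exist (the guestbook namespace is created later in this post).

```shell
# Hypothetical helper: restrict the egress of every sidecar in a namespace
# to the namespace itself ("./*") and to istio-system.
apply_namespace_sidecar() {
  ns="${1:-guestbook}"
  # A Sidecar without a workloadSelector applies to all workloads in the namespace
  kubectl --namespace "$ns" apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Sidecar
metadata:
  name: default
spec:
  egress:
  - hosts:
    - "./*"
    - "istio-system/*"
EOF
}
```

Besides isolating the namespace, such a resource reduces the amount of configuration Istiod pushes to each proxy, since the proxies no longer need to know about the rest of the mesh.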

In this section, we are going to integrate Istio with the guestbook application (we will be using this Git repository) running on Kubernetes and evaluate some of Istio's features.

Install Istio

First of all, we need to set up Istio following the official Installation Guide or simply by running the below commands.

$> curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.7.3 sh -
$> cd istio-1.7.3
$> export PATH=$PWD/bin:$PATH
$> istioctl install --set profile=demo
$> kubectl apply -f samples/addons

The above commands perform the following tasks:

- Download Istio 1.7.3, including the istioctl binary and the sample manifests, and add istioctl to the PATH.

- Install Istio with the demo profile, which provides default values for getting started with Istio.

- Install the Istio addons, such as Prometheus, Grafana, Jaeger, and Kiali.

After executing the above commands, you can verify that all the needed components were created on the cluster using the command below. All Deployments should be in the 1/1 READY state.
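The READY check can also be scripted. The sketch below reads the output of kubectl get deployments on standard input and prints the name of every Deployment whose ready replica count differs from its desired count; not_ready_deployments is a hypothetical name.

```shell
# Hypothetical filter: print Deployments whose READY column is not n/n.
# Expects `kubectl get deployments` output (with its header line) on stdin.
not_ready_deployments() {
  awk 'NR > 1 {
    # READY has the form ready/desired, for example 1/1
    split($2, r, "/")
    if (r[1] != r[2]) print $1
  }'
}
```

Piping kubectl get deployments --namespace istio-system into it prints nothing once everything is ready. Alternatively, kubectl wait --for=condition=Available deployment --all --namespace istio-system --timeout=300s blocks until every Deployment in the namespace reports the Available condition.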

$> kubectl get deployments --namespace istio-system
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
grafana                1/1     1            1           4m37s
istio-egressgateway    1/1     1            1           33s
istio-ingressgateway   1/1     1            1           6m23s
istiod                 1/1     1            1           6m27s
jaeger                 1/1     1            1           4m37s
kiali                  1/1     1            1           4m37s
prometheus             1/1     1            1           4m35s

The next step is to create a new namespace and label it for Istio. The istio-injection=enabled label instructs Istio to automatically inject the Envoy proxy into each Pod deployed in the guestbook namespace.

$> kubectl create namespace guestbook
$> kubectl label namespace guestbook istio-injection=enabled

Install Guestbook application

Now the cluster is ready for the guestbook app. The commands below clone the example repository and create the resources needed to run the guestbook app on the cluster.

$> git clone git@github.com:eon01/istio-example.git
$> cd istio-example
$> kubectl --namespace guestbook apply -f guestbook/step_1/

After deploying the guestbook, you can verify that the Envoy proxy sidecars were injected by checking the containers running inside the deployed Pods: each Pod should include an additional container named istio-proxy. The commands below can be used for this task, where ${pod} is the name of one of the listed Pods:

$> kubectl --namespace guestbook get pods
$> kubectl --namespace guestbook describe pod ${pod}

At this stage, all resources of the guestbook application, including the services and the Redis Pods, should be running on the cluster. However, the guestbook frontend is not reachable yet, since we haven't configured an ingress and the defined service does not expose the frontend outside the cluster.
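Instead of reading the full describe output for every Pod, the container names can be extracted with kubectl's JSONPath output. The sketch below (sidecar_report is a hypothetical name) prints each Pod of a namespace followed by injected or missing, depending on whether an istio-proxy container is present.

```shell
# Hypothetical helper: report whether each Pod has the istio-proxy sidecar.
# $1: namespace (defaults to guestbook).
sidecar_report() {
  ns="${1:-guestbook}"
  # One line per Pod: <pod name><TAB><space-separated container names>
  kubectl --namespace "$ns" get pods \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].name}{"\n"}{end}' |
    awk '{
      status = "missing"
      for (i = 2; i <= NF; i++) if ($i == "istio-proxy") status = "injected"
      print $1, status
    }'
}
```

If a Pod reports missing, check the namespace label with kubectl get namespace guestbook -L istio-injection; injection happens when a Pod is created, so Pods created before the label was added must be recreated to get the sidecar.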

Ingress Gateway

Istio Gateways are Istio's replacement for the Kubernetes Ingress resource. This type of resource is used to expose services running inside the Kubernetes cluster to clients outside of it. Since we would like to access the guestbook frontend from a web browser, we need to create a Gateway resource that handles inbound traffic for the frontend service. The command below creates an Istio Gateway for the host guestbook.lvh.me. (Note that we are using lvh.me for local testing; the domain and its subdomains resolve to 127.0.0.1.)

$> kubectl --namespace guestbook apply -f - << EOF

apiVersion: networking.istio.io/v1alpha3

kind: Gateway

metadata:

name: guestbook-gateway

spec:

selector:

istio: ingressgateway

servers:

- port:

number: 80

name: http

protocol: HTTP

hosts:

- "guestbook.lvh.me"

EOFNote that we are using the Istio ingress gateway, which is exposed on port 80 on the nodes.

$> kubectl get services --namespace istio-system | grep ingres

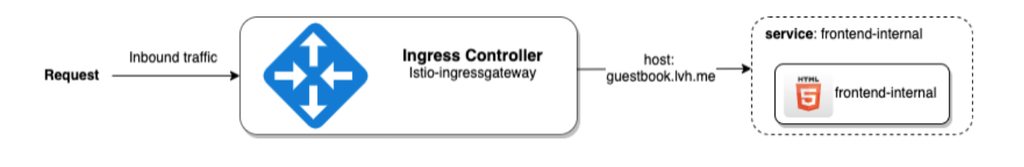

istio-ingressgateway LoadBalancer 10.101.188.201 localhost 15021:32443/TCP,80:30264/TCP,443:31880/TCP,31400:32758/TCP,15443:30301/TCP 2d5hWith the created Gateway, we have enabled inbound traffic on the guestbook.lvh.me to our cluster from the external world. However, the Gateway does not know where it should route the incoming requests. The below image taken from Istio’s official documentation shows the current status of the Gateway.
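Rather than reading the address from the service listing, it can be queried with JSONPath. The sketch below prints host:port for the HTTP listener of the istio-ingressgateway service; the ingress_address name is hypothetical, and the http2 port name is the one used by the Istio 1.7 demo profile (check kubectl get service istio-ingressgateway -n istio-system -o yaml if your installation differs).

```shell
# Hypothetical helper: print <external address>:<port> of the ingress gateway.
ingress_address() {
  svc="service/istio-ingressgateway"
  # Some load balancers publish an IP address...
  host=$(kubectl --namespace istio-system get "$svc" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  # ...others publish a hostname, such as the "localhost" shown above
  if [ -z "$host" ]; then
    host=$(kubectl --namespace istio-system get "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
  fi
  # Service port of the HTTP listener (assumed to be named "http2")
  port=$(kubectl --namespace istio-system get "$svc" \
    -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')
  echo "${host}:${port}"
}
```

Once the VirtualService below is in place, the result can be used directly, for example curl -I -H "Host: guestbook.lvh.me" "http://$(ingress_address)/".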

The next step is to create a VirtualService resource to enable access to the frontend service.

Virtual services can be used to achieve several tasks, such as defining routing rules, delays, timeouts, HTTP redirects, and many more. For the complete list, you can check out this page. In our case, we would like to define a simple routing rule that forwards the traffic from the defined gateway to the frontend-internal service.

The following command will create the needed Kubernetes object. As you can see the virtual service definition file includes the following sections:

- The virtual service host.

- The name of the gateway to be used.

- The match section: This section is used to filter which requests will be forwarded or served by the virtual service.

- The route destination configurations (service name and port)

$> kubectl --namespace guestbook apply -f - << EOF

apiVersion: networking.istio.io/v1alpha3

kind: VirtualService

metadata:

name: guestbook-frontend

spec:

hosts:

- "guestbook.lvh.me"

gateways:

- guestbook-gateway

http:

- match:

- uri:

prefix: /

route:

- destination:

port:

number: 80

host: frontend-internal

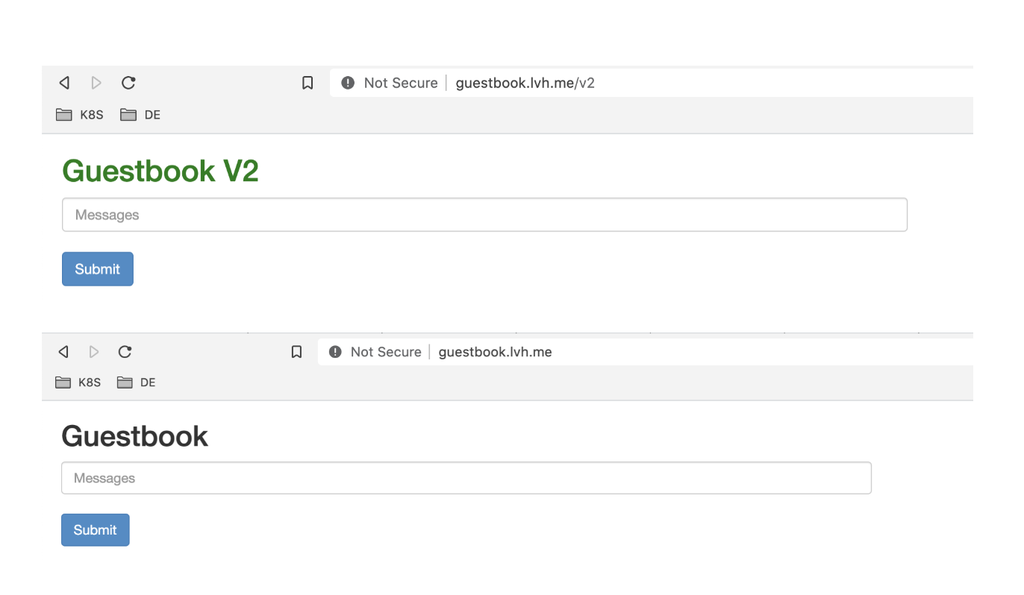

EOFAfter executing the above command, you should be able to see the guestbook interface on your browser if you visited the defined domain, as shown in the image below.

Our setup now looks like the image below: the gateway accepts the traffic for the defined hostname, and the virtual service forwards it to the frontend-internal service on port 80.

Running curl against the defined domain shows that the incoming requests are handled by the Istio Envoy proxy (note the server: istio-envoy and x-envoy-upstream-service-time headers) and then forwarded to the guestbook application.

$> curl 127.0.0.1 -H "Host: guestbook.lvh.me" --head
HTTP/1.1 200 OK
date: Sun, 25 Oct 2020 10:16:53 GMT
server: istio-envoy
last-modified: Wed, 09 Sep 2015 18:35:04 GMT
etag: "399-51f54bdb4a600"
accept-ranges: bytes
content-length: 921
vary: Accept-Encoding
content-type: text/html
x-envoy-upstream-service-time: 8

Traffic Routing

Routing based on URI (with precedence)

Virtual services can be used for more than simple request forwarding. In this section, we will reconfigure the virtual service to route requests to different service versions based on the URI of the request.

To illustrate, we will create a new version of the virtual service that uses the version included in the request URI to route the request to the matching guestbook version.

To achieve that, we first deploy version 2 of the guestbook from guestbook/step_2/ and then update the VirtualService we created earlier, using the following commands.

$> kubectl --namespace guestbook apply -f guestbook/step_2/
$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - match:
    - uri:
        prefix: /v2
    rewrite:
      uri: "/"
    route:
    - destination:
        port:
          number: 80
        host: frontend-internal-v2
  - route:
    - destination:
        port:
          number: 80
        host: frontend-internal
EOF

The new virtual service rewrites any URI with the /v2 prefix by replacing that prefix with / and then forwards the request to the frontend-internal-v2 service. All other requests are forwarded to the frontend-internal service. Routes are evaluated in order, so the /v2 rule must come before the catch-all route.

The new structure of our setup looks like the image below, where the virtual service forwards requests to different services based on the URI prefix.

After applying this change, http://guestbook.lvh.me/v2 serves the version 2 interface of the guestbook application.
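Both routes can also be checked from the terminal. The sketch below (compare_versions is a hypothetical name) requests / and /v2 through the gateway on 127.0.0.1, as in the earlier curl example, and prints the <h2> heading returned for each path; it assumes, as the header-based example below shows, that the version 2 page has a different heading.

```shell
# Hypothetical helper: print the page heading served for / and for /v2.
# Assumes the ingress gateway answers on 127.0.0.1:80.
compare_versions() {
  for path in / /v2; do
    # The Host header selects the Gateway/VirtualService host guestbook.lvh.me
    heading=$(curl -fs -H "Host: guestbook.lvh.me" "http://127.0.0.1${path}" | grep '<h2' || true)
    echo "${path} -> ${heading}"
  done
}
```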

Routing based on HTTP headers

It is also possible to route requests based on their HTTP headers. As an example, we will route all requests with a specific header to guestbook version 2 and all other requests to version 1 of the application.

The VirtualService configuration below routes all requests with the HTTP header "source: terminal" to version 2 and all other requests to version 1:

$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - match:
    - headers:
        source:
          exact: terminal
    route:
    - destination:
        port:
          number: 80
        host: frontend-internal-v2
  - route:
    - destination:
        port:
          number: 80
        host: frontend-internal
EOF

If we now send a couple of requests, we can see that requests without the header, or with a value other than terminal, are routed to the guestbook version 1 interface, while requests with terminal as the value of the source header are routed to version 2, as shown below.

# Responses from version 1
$> curl -fs guestbook.lvh.me -H "source: x-terminal" | grep Guest
<title>Guestbook</title>
<h2>Guestbook</h2>
$> curl -fs guestbook.lvh.me | grep Guest
<title>Guestbook</title>
<h2>Guestbook</h2>

# Responses from version 2
$> curl -fs guestbook.lvh.me -H "source: terminal" | grep Guest
<title>Guestbook</title>
<h2 style="color: GREEN;">Guestbook V2</h2>

Routing to different subsets of a service

Another common routing use case is to split the incoming requests between different versions of the same application. This is typical when a new version should receive only part of the requests before it is fully rolled out.

In this section, we will reconfigure the virtual service to forward 70% of the requests to v3 of the service and the remaining 30% to v1.
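Once the VirtualService and DestinationRule from this section are in place, the split can be checked empirically. The sketch below (sample_split is a hypothetical name) sends N requests through the gateway on 127.0.0.1 and counts the distinct <h2> headings returned, assuming that the v1 and v3 pages differ in their heading the way v1 and v2 do above.

```shell
# Hypothetical helper: send N requests through the gateway and count the
# distinct <h2> headings, one per guestbook version.
# Assumes the ingress gateway answers on 127.0.0.1:80 and that the v1 and v3
# pages have different headings.
sample_split() {
  n="${1:-100}"
  i=0
  while [ "$i" -lt "$n" ]; do
    curl -fs -H "Host: guestbook.lvh.me" http://127.0.0.1/ | grep '<h2' || true
    i=$((i + 1))
  done | sort | uniq -c
}
```

Because the weights are applied per request, sample_split 100 should show counts close to, but not necessarily exactly, 70 and 30.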

First, we need to clean up the setup by removing the version 2 resources and deploying guestbook version 3, using the following commands.

$> kubectl --namespace guestbook delete -f guestbook/step_2/
$> kubectl --namespace guestbook apply -f guestbook/step_3/

The command below reconfigures the virtual service to balance the incoming requests between the versions of the guestbook. Note that we forward the requests to the same service, but with different values for the subset field.

$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - route:
    - destination:
        port:
          number: 80
        host: frontend-internal
        subset: v3
      weight: 70
    - destination:
        port:
          number: 80
        host: frontend-internal
        subset: v1
      weight: 30
EOF

In the above command, we used subsets to select the destinations; therefore, we must define a DestinationRule that declares these subsets to enable end-to-end communication. Otherwise, all incoming requests will fail.

The following command will create the needed rule to define the subsets based on the labels of the deployed Pods.
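Since the subsets select Pods by their version label, a frontend Pod without that label is never part of a subset. The sketch below (pods_without_version is a hypothetical name) lists Pods that carry no version label; kubectl --namespace guestbook get pods -L version shows the same information as an extra column.

```shell
# Hypothetical helper: list Pods that have no "version" label.
# $1: namespace (defaults to guestbook).
pods_without_version() {
  ns="${1:-guestbook}"
  # One line per Pod: <pod name><TAB><value of the version label, possibly empty>
  kubectl --namespace "$ns" get pods \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.labels.version}{"\n"}{end}' |
    awk 'NF < 2 { print $1 }'
}
```

Pods that are not behind frontend-internal, such as the Redis Pods, may be listed too if they carry no version label; only the frontend Pods need it for the subsets to work.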

$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: guestbook-destination
spec:
  host: frontend-internal
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v3
    labels:
      version: v3
EOF

Network Resilience

Istio provides resources to configure network timeouts and retry policies for services without the need for changing the application code or configuration. In addition, it allows us to introduce network delays and also failures to test the resiliency of our applications.
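Failures are injected the same way as the delay shown below, through a fault section of the VirtualService. The sketch below is an example of an HTTP abort: it makes Envoy answer 50% of the requests with HTTP 503 without forwarding them. The 50% value and the function name apply_abort_fault are arbitrary choices for illustration; applying it replaces the current guestbook-frontend VirtualService, so re-apply one of the other manifests in this post to remove the fault.

```shell
# Hypothetical helper: abort 50% of the requests to the frontend with HTTP 503.
# Replaces the whole guestbook-frontend VirtualService.
apply_abort_fault() {
  kubectl --namespace guestbook apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - fault:
      abort:
        percentage:
          value: 50
        httpStatus: 503
    route:
    - destination:
        host: frontend-internal
EOF
}
```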

In this section, we will configure our services with HTTP delay and request timeouts as examples.

Injecting HTTP Delay

Introducing a delay on a given service is straightforward: update the virtual service and add a fault delay section. The command below updates the guestbook-frontend virtual service and introduces a 10-second delay on all incoming requests.

$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - fault:
      delay:
        percent: 100
        fixedDelay: 10s
    route:
    - destination:
        host: frontend-internal
EOF

After applying the change, the commands below show that requests to the guestbook application are affected by the 10s delay: they measure the time needed to process the same request before and after the change, and the total time increased from 0.027 seconds to 10.034 seconds.

# Before applying the delay
$> time curl http://guestbook.lvh.me --head
HTTP/1.1 200 OK
date: Sun, 25 Oct 2020 20:47:56 GMT
server: istio-envoy
last-modified: Wed, 09 Sep 2015 18:35:04 GMT
etag: "399-51f54bdb4a600"
accept-ranges: bytes
content-length: 921
vary: Accept-Encoding
content-type: text/html
x-envoy-upstream-service-time: 3
curl http://guestbook.lvh.me --head 0.01s user 0.01s system 54% cpu 0.027 total

# After applying the delay
$> time curl http://guestbook.lvh.me --head
HTTP/1.1 200 OK
date: Sun, 25 Oct 2020 20:48:30 GMT
server: istio-envoy
last-modified: Wed, 09 Sep 2015 18:35:04 GMT
etag: "399-51f54bdb4a600"
accept-ranges: bytes
content-length: 921
vary: Accept-Encoding
content-type: text/html
x-envoy-upstream-service-time: 5
curl http://guestbook.lvh.me --head 0.01s user 0.01s system 0% cpu 10.034 total

Request Timeouts

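We can also configure a custom timeout on the guestbook service with Istio to avoid long-running requests. With the configuration below, Envoy stops waiting for the upstream after 2s and returns an error to the client. Note that kubectl apply replaces the whole spec of the guestbook-frontend VirtualService, so this manifest also removes the fault delay injected in the previous step: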
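To see the effect of the timeout from the client side, the sketch below prints the status code and total time of one request through the gateway on 127.0.0.1; check_timeout is a hypothetical helper name. A 504 status indicates that Envoy gave up waiting for the upstream (Envoy's upstream request timeout), while any other status means the request completed within the limit.

```shell
# Hypothetical helper: print status code, total time and a verdict for one request.
# Assumes the ingress gateway answers on 127.0.0.1:80.
check_timeout() {
  curl -s -o /dev/null -w '%{http_code} %{time_total}\n' \
    -H "Host: guestbook.lvh.me" http://127.0.0.1/ |
    awk '{
      # Envoy answers 504 when the route timeout expires before the upstream replies
      verdict = ($1 == 504) ? "timed out" : "completed"
      print $1, $2 "s", verdict
    }'
}
```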

$> kubectl --namespace guestbook apply -f - << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: guestbook-frontend
spec:
  hosts:
  - "guestbook.lvh.me"
  gateways:
  - guestbook-gateway
  http:
  - route:
    - destination:
        port:
          number: 80
        host: frontend-internal
        subset: v3
      weight: 70
    - destination:
        port:
          number: 80
        host: frontend-internal
        subset: v1
      weight: 30
    timeout: 2s
EOF

Observability and Kiali Visualizations

Istio provides us with tools that can be used to observe the interactions between the services running on a Kubernetes cluster and access logs as well as exposed metrics about the running services.

The Envoy proxy injected into each running Pod exposes metrics, logs, and communication data about the corresponding Pod. The metrics are scraped by Prometheus and can then be visualized in Grafana or Kiali.

Kiali is a management console for an Istio-based service mesh. It shows the services deployed to the Istio service mesh and how they are connected, and it provides dashboards and observability tools for monitoring the running services.

At this stage, you can start using Kiali simply by exposing the internal interface using the below command.

$> kubectl port-forward -n istio-system service/kiali 20001:20001

After exposing Kiali to the host, you can open the Kiali console at http://localhost:20001/ and view the service graph, as shown below.

Once you start using the guestbook interface, Kiali updates the service graph with the new connections from the frontend to the Redis service.
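The graph is built from the traffic the proxies have actually reported, so it can help to send some requests first. The sketch below (generate_traffic is a hypothetical name) sends N requests to a path through the gateway on 127.0.0.1 and reports how many succeeded. Depending on how the frontend talks to Redis, a plain request for / may only exercise the gateway-to-frontend edge; the Redis edges appear once the guestbook interface itself is used, as described above.

```shell
# Hypothetical helper: send N requests (default 50) to PATH (default /)
# through the ingress gateway and report how many succeeded.
generate_traffic() {
  n="${1:-50}"
  path="${2:-/}"
  ok=0
  i=0
  while [ "$i" -lt "$n" ]; do
    # -f makes curl exit non-zero on HTTP status 400 and above
    if curl -fs -o /dev/null -H "Host: guestbook.lvh.me" "http://127.0.0.1${path}"; then
      ok=$((ok + 1))
    fi
    i=$((i + 1))
  done
  echo "${ok}/${n} requests succeeded"
}
```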

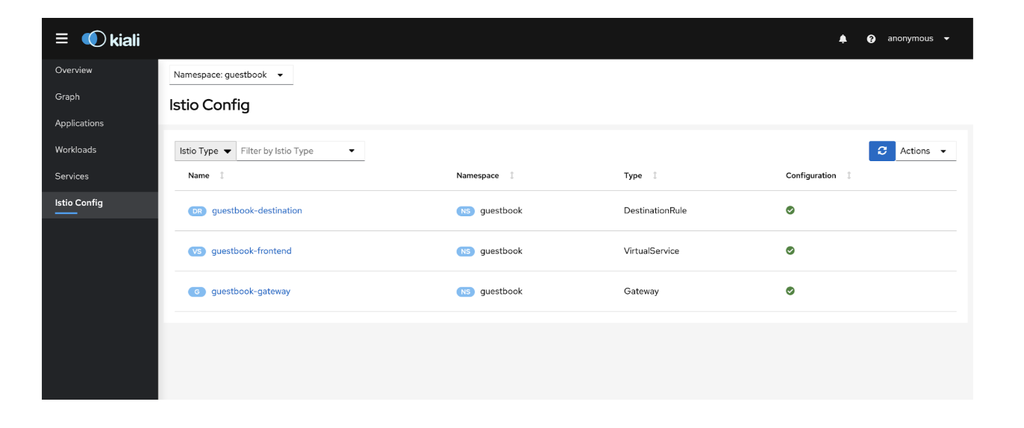

Kiali provides more than the dependency graph visualization. For instance, you can inspect the deployed applications, services, and workloads, and monitor service traffic, logs, and metrics.

It is also possible to view and edit the Istio resource objects defined in the cluster, as shown in the image below.

Logs

The Envoy proxy sidecar running in every Pod provides access logs for the running applications. This gives us better visibility into the inbound and outbound traffic of each microservice. Below is an example of the access logs.
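The raw lines are long, but the upstream cluster field follows a fixed direction|port|subset|service pattern, which makes them easy to summarize. The sketch below (summarize_access_log is a hypothetical name) reads access-log lines on standard input and prints the timestamp, direction, destination service and port, and upstream Pod address. It assumes the default Istio text log format, in which the quoted upstream host is the field just before the upstream cluster, as in the log lines shown below.

```shell
# Hypothetical filter: summarize Envoy access-log lines read from stdin.
# Assumes Istio's default text format, where the quoted upstream host
# immediately precedes the direction|port|subset|service upstream cluster.
summarize_access_log() {
  awk '{
    # The first field is the timestamp wrapped in [ ]
    ts = substr($1, 2, length($1) - 2)
    for (i = 2; i <= NF; i++) {
      if ($i ~ /^(inbound|outbound)[|]/) {
        n = split($i, part, "|")
        host = $(i - 1)
        gsub(/"/, "", host)
        print ts, part[1], part[n] ":" part[2], host
        break
      }
    }
  }'
}
```

Piped after the kubectl logs command below, it turns the first of the two log lines into 2020-10-27T20:10:54.473Z outbound redis-slave.guestbook.svc.cluster.local:6379 10.1.0.59:6379.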

You can also view the logs directly with kubectl, as shown below:

$> kubectl --namespace guestbook logs frontend-v1-577b8bfdd9-hr9w7 istio-proxy | grep -E "inbound|outbound" | tail -2
[2020-10-27T20:10:54.473Z] "- - -" 0 - "-" "-" 27 31 29 - "-" "-" "-" "-" "10.1.0.59:6379" outbound|6379||redis-slave.guestbook.svc.cluster.local 10.1.0.60:41554 10.96.178.195:6379 10.1.0.60:58148 - -
[2020-10-27T20:10:55.986Z] "- - -" 0 - "-" "-" 27 31 11 - "-" "-" "-" "-" "10.1.0.62:6379" outbound|6379||redis-slave.guestbook.svc.cluster.local 10.1.0.60:36768 10.96.178.195:6379 10.1.0.60:58200 - -

Conclusion

Istio is not only a service mesh but a platform on top of which you can run your microservices relying on the multiple features it provides such as traffic routing, network resilience, logging, and metrics.

In this blog post, we have tried to highlight some of those features; however, this post is not an exhaustive walkthrough of Istio, which provides more features such as distributed tracing and security (authorization, network policy enforcement, mTLS).

To sum up, the Istio platform and its tools come with a range of advantages for running microservice software on Kubernetes. First of all, they give you more control and offer out-of-the-box solutions for the observability and monitoring of the services running in the cluster.

Second, the integration and deployment of these tools are relatively straightforward and follow the same techniques and tools used to deploy services to Kubernetes (resources are defined in YAML files).


Cloudplex

Founder and CEO of Cloudplex - We make Kubernetes easy for developers.
